Installed on Alpine Linux, got most of the way there…

The first issue was related to the BusyBox version of the ‘cp’ command. The setup script failed on cp --backup=numbered, but installing the version of cp from coreutils fixed that.

apk add --upgrade coreutils

The second issue was related to a printf command that BusyBox didn’t like (presumably find -printf, since findutils is the package that provides find). Installing that package fixed it too.

apk -U add findutils
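In case it helps anyone else on Alpine, here is a rough preflight sketch for checking both features before running the setup script. The check_feature helper is a hypothetical name of mine, not part of rcstack, and the find -printf probe is an assumption about which printf usage actually failed.

```shell
#!/bin/sh
# Hypothetical helper (not part of rcstack): run a probe command quietly
# and report whether it succeeded, with a hint on how to fix it if not.
check_feature() {
    label=$1
    hint=$2
    shift 2
    if "$@" >/dev/null 2>&1; then
        echo "ok: $label"
    else
        echo "missing: $label ($hint)"
        return 1
    fi
}

# Scratch files so the cp probe has something to back up.
workdir=$(mktemp -d)
echo a > "$workdir/src"
echo b > "$workdir/dst"

# BusyBox cp rejects --backup=numbered; GNU cp from coreutils accepts it.
check_feature "cp --backup=numbered" "apk add --upgrade coreutils" \
    cp --backup=numbered "$workdir/src" "$workdir/dst" || true

# Assumption: the failing printf was find's -printf action (GNU findutils).
check_feature "find -printf" "apk -U add findutils" \
    find "$workdir" -maxdepth 0 -printf '' || true

rm -rf "$workdir"
```

Each probe prints either an "ok:" line or a "missing:" line with the apk command that fixed it for me.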

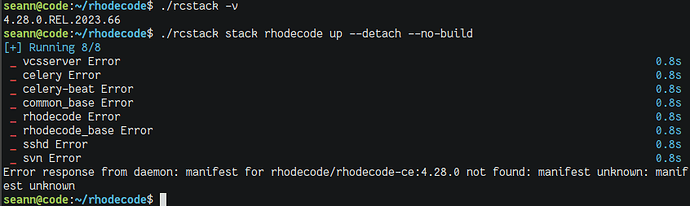

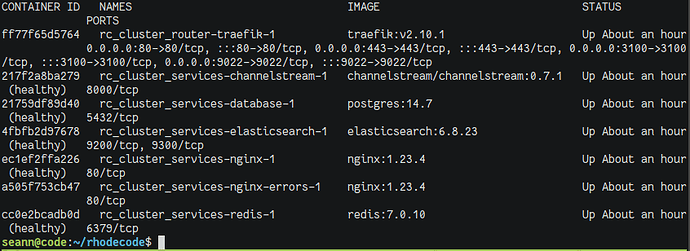

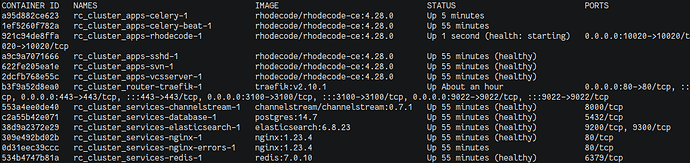

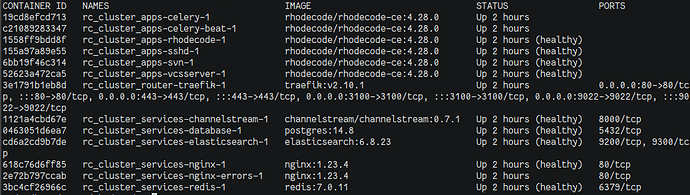

The setup finally succeeded, and I was able to run ./rcstack init. The router and services stacks were also set up fine.

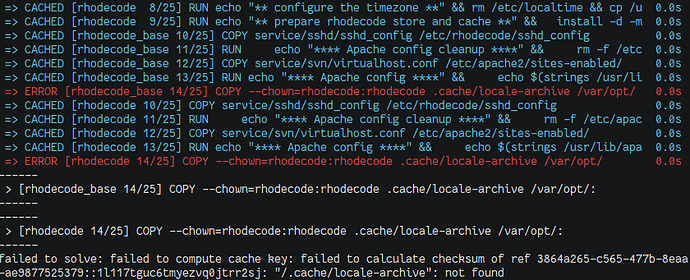

However, executing ./rcstack stack rhodecode up --detach results in this error:

=> ERROR [rhodecode_base 14/25] COPY --chown=rhodecode:rhodecode .cache/locale-archive /var/opt/ 0.0s

And:

failed to solve: failed to compute cache key: failed to calculate checksum of ref moby::q01k4c85arg7b8skxl0lpj5si: "/.cache/locale-archive": not found

If I dig into the service/rhodecode/rhodecode.dockerfile and comment out the line mentioned above, the setup gets a little further, then fails again, likely because essential steps were skipped.
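As far as I understand it, a "not found" on a COPY step generally means the source path is missing from the build context (or is excluded by a .dockerignore), so a less invasive check than editing the dockerfile is to look for the file on the host. A minimal sketch, assuming the build context is the current directory, which I have not confirmed for rcstack; check_build_input is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: report whether a COPY source exists in a build context.
# $1 = build context directory (assumed, not confirmed for rcstack)
# $2 = source path relative to that directory, as written in the COPY line
check_build_input() {
    if [ -e "$1/$2" ]; then
        echo "present: $2"
    else
        echo "absent: $2"
        return 1
    fi
}

# The path comes from the failing COPY step; "." as the context is a guess.
check_build_input . .cache/locale-archive || true
```

If this prints "absent", the file was presumably never generated on the host, which would point at an earlier setup step rather than at the dockerfile itself.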

So the Docker setup is not very intuitive, but it appears that it will work once a few errors are resolved. The initial errors come from using Alpine as a minimalist Docker platform; they may not affect many users and likely don’t require mitigation. The errors encountered while starting the RhodeCode container, however, seem to be happening inside the container.

Also, I am not certain how to proceed once the setup is complete, as I don’t see any further documentation for the Docker setup. It has a few more moving parts, like Prometheus and Grafana, that are not part of the standard installation. However, getting started might make more sense after consulting the standard docs.

Then again, it could be user error. Please let me know if this is the case…